The AI productivity tracker that helps you focus

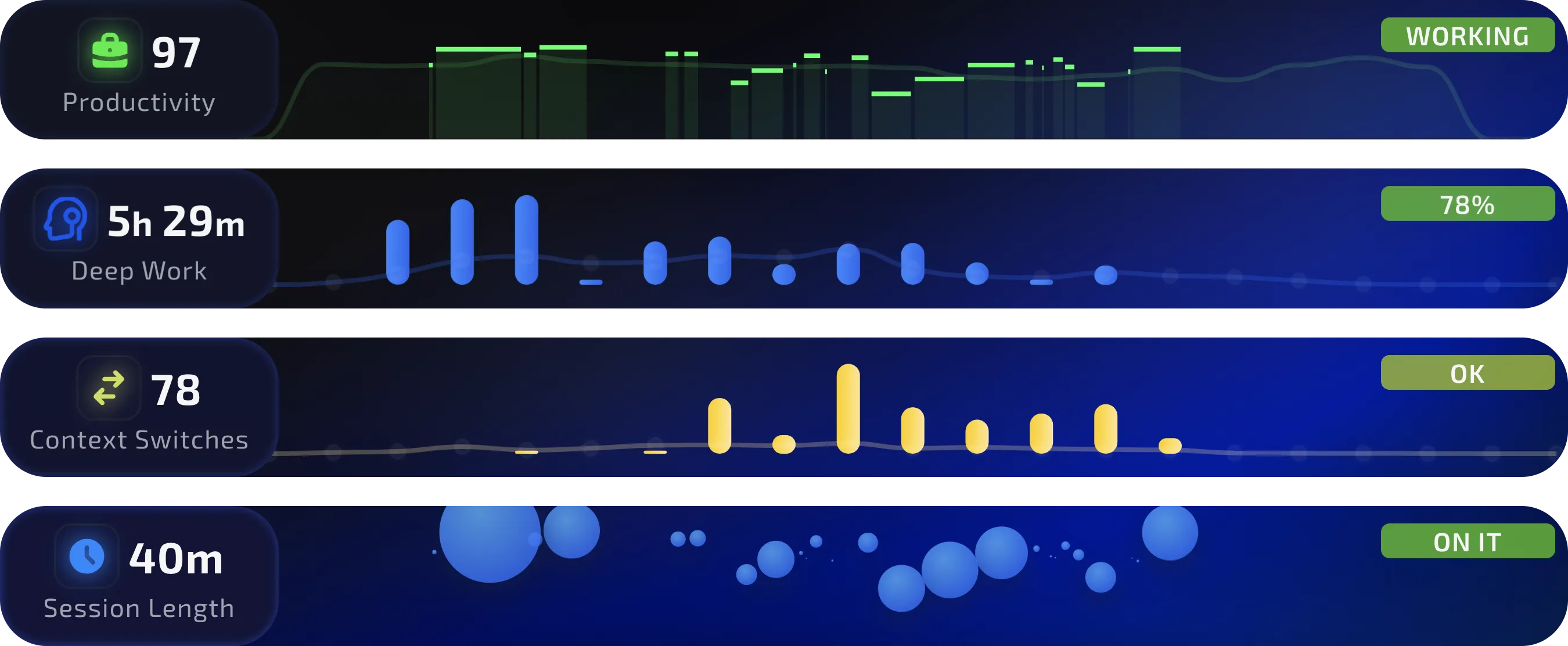

Designed to help founders and makers do deep work.

We launched today!

Wall of love

Magicflow helps amazing people get work done

What I love about Magicflow is that it's actionable. Other time-tracking apps just display your data.

Magicflow goes one step further and tells you what you can do to become more productive.

None of the productivity and time-tracking tools out there actually help you get into flow and stay in flow the way that Magicflow does. Huge fan!

Magicflow doesn't just show what I've worked on, it also lets me dive into how well I’m working, to see what’s affecting my focus and make tweaks to my schedule.

Track what's distracting you

Get real with what’s distracting you from your goals.

Start a focus session to zone in.

Live flow timers for focus sessions

Pomodoro timers, distraction warnings.

A glowing flow meter that you’ll want to fill up.

Free to try.

Affordable for pros.

Free for 30 days

For getting started

$0

30-day free trial

1 month free

Pro

Full of powerful features

Download now

No-questions-asked refunds

Pre-seed founder, student, and hardship discounts available.
